AI Inference for Robotics and Drones

Efficient yet powerful AI inference increases flexibility while reducing payload size

Providing “eyes” around any scene

Embedded vision in robotics and drones extends perception to more places, often ones that are difficult for humans to reach. Efficient AI inference processing needs less size, weight, and power, so even smaller vehicles can run powerful models. The result is longer range or more time on scene. Advanced inference techniques also help robots become productive sooner, with less programming effort.

Structural integrity is necessary for mission-critical infrastructure to remain safely in service. Routine inspections can be time-consuming and hazardous for the people who perform them. Emergency inspections are even more urgent and can be dangerous.

Uncrewed drones can quickly perform inspections at high sensor resolution with little risk for human operators. Drones can often reach inside and around structures, providing new perspectives such as views of pipelines or storage tank interiors.

More AI processing in a drone can automate inspection patterns and spot infrastructure faults without a persistent cloud connection or complex programming. It also enables enhanced perception using higher-resolution sensors and additional sensing modalities. Lower power consumption means drones can fly farther and dwell longer.

When people think of monitoring, security often comes to mind: detecting intruders in and around a facility. While surveillance is an important application for drones, it’s not the only one. Activity monitoring can also substantially improve productivity.

Drones flying repeated missions over a facility can see changes that occur between missions. For example, drones can confirm that materials have been delivered to the correct location on a construction site. A supplier can use a drone to verify inventory in its yard without sending a person out to look. Large-scale operations such as farms, oil fields, and mines can use drones to see where work needs to happen and where workers are.

Drone-based AI can help detect unsafe conditions, such as workers without hard hats. It can also highlight changes, such as vehicles or materials that have moved. It can count the people or vehicles in an area to confirm that work is in progress.

In an emergency such as a natural disaster, structure fire or collapse, or a major hazardous materials spill, time is critical. Conditions may be unsafe for any victims, responders, or nearby populations. Rapid assessment can save lives.

Whether airborne, ground-based, or seaborne, robotic vehicles can enter hazardous situations without placing first responders at greater risk. Depending on the sensor payload, they may be able to see through obscurants that would thwart human perception. They might even get close enough to establish remote communication with a trapped person. Precise locations and visual triage can help prioritize and route assistance.

With AI, robots gain more capability without difficult programming. Image segmentation, object recognition, and object tracking all improve. Enhanced simultaneous localization and mapping (SLAM) helps chart movements accurately.

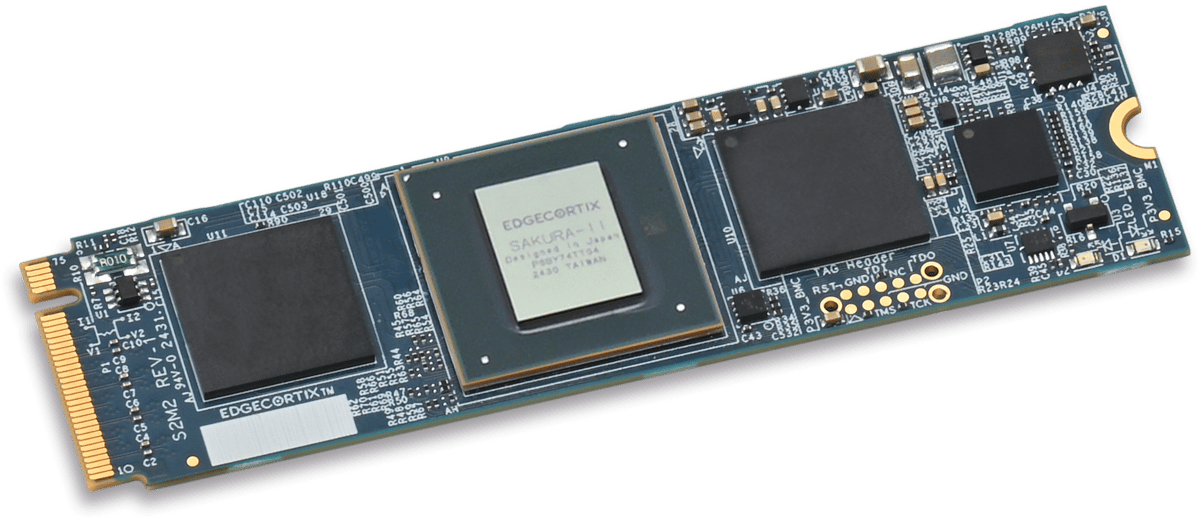

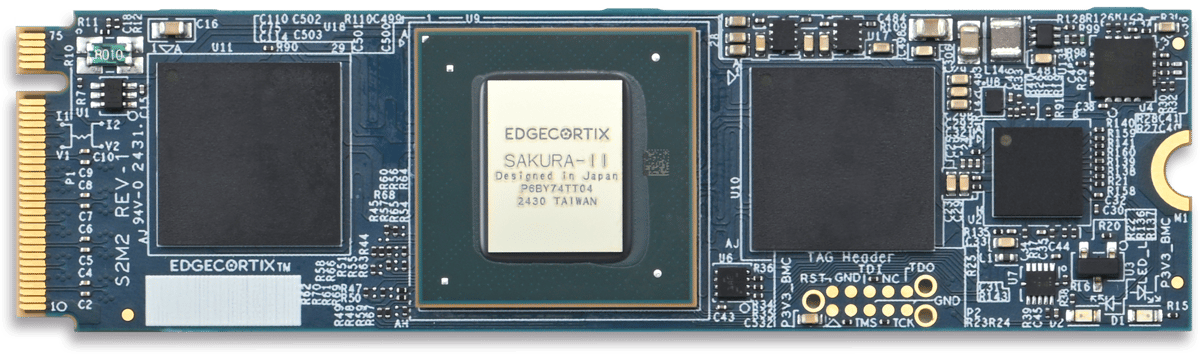

SAKURA-II M.2 Modules and PCIe Cards

EdgeCortix SAKURA-II can be easily integrated into a host system for software development and AI model inference tasks.

Order an M.2 Module or a PCIe Card and get started today!

EdgeCortix Edge AI Platform

Full-Stack Solution: Integrated Platform Increases Ecosystem Value Over Time

Platform components: Unique Software, Proprietary Architecture, AI Accelerator, Efficient Hardware (Modules and Cards powered by the latest SAKURA-II AI Accelerators), and Deployable Systems.

“Given the tectonic shift in information processing at the edge, companies are now seeking near-cloud-level performance where data curation and AI-driven decision making can happen together. Due to this shift, the market opportunity for the EdgeCortix solution set is massive, driven by the practical business need across multiple sectors which require both low-power and cost-efficient intelligent solutions. Given the exponential global growth in both data and devices, I am eager to support EdgeCortix in their endeavor to transform the edge AI market with an industry-leading IP portfolio that can deliver performance with orders of magnitude better energy efficiency and a lower total cost of ownership than existing solutions.”

“Improving the performance and the energy efficiency of our network infrastructure is a major challenge for the future. Our expectation of EdgeCortix is to be a partner who can provide both the IP and expertise that is needed to tackle these challenges simultaneously.”

“With the unprecedented growth of AI/machine learning workloads across industries, the solution we're delivering with leading IP provider EdgeCortix complements BittWare's Intel Agilex FPGA-based product portfolio. Our customers have been searching for this level of AI inferencing solution to increase performance while lowering risk and cost across a multitude of business needs both today and in the future.”

“EdgeCortix is in a truly unique market position. Beyond simply taking advantage of the massive need and growth opportunity in leveraging AI across many key business sectors, it’s the business strategy with respect to how they develop their solutions for their go-to-market that will be the great differentiator. In my experience, most technology companies focus very myopically on delivering great code or perhaps semiconductor design. EdgeCortix’s secret sauce is in how they’ve co-developed their IP, applying equal importance to both the software IP and the chip design, creating a symbiotic software-centric hardware ecosystem. This sets EdgeCortix apart in the marketplace.”