Connecting Edge AI Software with PyTorch, TensorFlow Lite, and ONNX Models

Antonio Nevado


Data scientists and others may have concerns about moving PyTorch, TensorFlow, and ONNX models to edge AI software applications. MERA makes it easy and is model-agnostic.

PyTorch, TensorFlow, and ONNX are familiar tools for many data scientists and AI software developers. These frameworks run models natively on a CPU or accelerated on a GPU, requiring little hardware knowledge. But ask those same folks to move their applications to edge devices, and suddenly knowing more about AI acceleration hardware becomes essential – and perhaps a bit intimidating for the uninitiated. There is a solution: MERA, the software compiler and run-time framework from EdgeCortix, takes the mystery out of connecting edge AI software with PyTorch, TensorFlow, and ONNX models running in edge AI co-processors or FPGAs.

What’s different about edge AI applications?

In enterprise AI applications, training often runs on server or cloud-based hardware, where performance, power consumption, storage, and mathematical precision are practically unlimited and bounded only by capital and operating expenses. AI inference may scale down to a physically smaller server-class machine using similar CPU and GPU technology, or stay in the data center or cloud.

Edge AI applications often use different hardware for AI inference, dealing with size, power consumption, precision, and real-time determinism constraints. The host processor might have Arm or RISC-V cores or a smaller, more energy-efficient Intel or AMD processor. Models might run on an edge AI chip with dedicated processing cores or a high-performance PCI accelerator card containing an FPGA programmed with inference logic.

These edge-ready hardware environments may be mysterious territory for data scientists and others who are less hardware-centric. Likely concerns that crop up:

- Do models have to be rewritten, how hard is that, and how long will it take?

- How do models map to hardware for better performance and efficiency?

- What about compute precision – will retraining and verification be required?

- How does AI acceleration work with an application running on a host processor?

Moving an AI application to an edge platform shouldn’t force a jarring change of tools. Ideally, edge AI application developers would perform model research in a familiar environment (PyTorch, TensorFlow, or ONNX on a workstation, server, or cloud platform) and then make an automated conversion for deployment on an edge AI accelerator.

Interpreting PyTorch, TensorFlow, or ONNX models with MERA

MERA powers a smooth transition to edge AI software. Developers work in familiar Python from start to finish instead of another programming language or FPGA terminology. MERA automatically partitions computational graphs between an edge device host processor and AI acceleration hardware, reconfiguring acceleration IP for optimal results.
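To make the idea of graph partitioning concrete, here is a minimal sketch in plain Python. It is not MERA's algorithm or API: the operator names, the `ACCELERATOR_OPS` set, and the `partition` function are all hypothetical, and a real compiler works on a full graph rather than a flat list. The sketch only shows the basic idea of grouping consecutive operators by whether the accelerator can run them.

```python
from typing import List, Set, Tuple

# Hypothetical set of operators an accelerator supports (an assumption for
# illustration; not taken from MERA or EdgeCortix documentation).
ACCELERATOR_OPS: Set[str] = {"conv2d", "relu", "add", "max_pool2d"}


def partition(
    ops: List[str], supported: Set[str] = ACCELERATOR_OPS
) -> List[Tuple[str, List[str]]]:
    """Group a linear sequence of ops into contiguous host/accelerator segments."""
    segments: List[Tuple[str, List[str]]] = []
    for op in ops:
        target = "accelerator" if op in supported else "host"
        if segments and segments[-1][0] == target:
            # Same target as the previous op: extend the current segment.
            segments[-1][1].append(op)
        else:
            # Target changed: start a new segment.
            segments.append((target, [op]))
    return segments


if __name__ == "__main__":
    model_ops = ["resize", "conv2d", "relu", "max_pool2d", "softmax"]
    for target, segment in partition(model_ops):
        print(f"{target}: {segment}")
```

Each change of target implies a data transfer between host and accelerator, which is one reason a production compiler would try to keep segments long.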

At a high level, a typical MERA deployment process has these steps.

- Model Zoo: MERA has many open-source, pre-trained models developers can start with and explore. Developers can modify these or create entirely new custom models for their AI inference project.

- Calibration and Quantization: PyTorch, TensorFlow, and ONNX provide built-in model quantization tools for converting models from high-precision formats like 32-bit floating point to 8-bit integer or another format supported in EdgeCortix Dynamic Neural Accelerator (DNA) IP, allowing faster, more efficient hardware execution. Calibration pulls samples from the training data set, runs inference at the new quantization, looks at model node statistics, and adjusts parameters for accuracy. The MERA 1.3.0 release adds quantization capability directly in MERA, a new alternative to using built-in quantization from PyTorch, TensorFlow, or ONNX.

- Model Importing: MERA imports quantized models directly from TorchScript IR, TFLite, or ONNX formats. Before exporting a TensorFlow model, developers should check that it is compatible with TensorFlow Lite, the mobile-friendly subset of TensorFlow.

- Compilation: MERA starts with Apache TVM at its front end for importing and computational graph offloading, and uses an all-new EdgeCortix extended back end for compilation and optimization. It outputs a set of binary artifacts, including parameter data and a shared library loadable from Python and C++.

- Interpreter and Simulator: A development host-based Interpreter is available in MERA to aid initial development and debugging, running at the same precision as the EdgeCortix DNA IP. Also available is a complete development host-based simulator, using a cycle-accurate representation of EdgeCortix DNA IP matching target behavior, helpful in evaluating latency and determinism before actual target hardware is available.

- Deployment: MERA performs target cross-compilation to an edge host processor and acceleration hardware in an SoC or an FPGA-based PCI accelerator card in a system. It understands EdgeCortix DNA IP configuration rules, automatically compiling code for the correct version. It also handles configuration optimization and workload scheduling for maximizing utilization and minimizing latency.
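The calibration and quantization step in the list above can be illustrated with a small, framework-free sketch. It assumes a simple min/max calibration and an affine 8-bit integer mapping, which is one common scheme. It does not reproduce the quantizers in PyTorch, TensorFlow, ONNX, or MERA, and the function names `calibrate`, `quantize`, and `dequantize` are hypothetical.

```python
from typing import List, Sequence, Tuple


def _int_range(num_bits: int) -> Tuple[int, int]:
    """Return the signed integer range for the given bit width."""
    return -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1


def calibrate(samples: Sequence[float], num_bits: int = 8) -> Tuple[float, int]:
    """Derive an affine scale and zero point from observed min/max values."""
    qmin, qmax = _int_range(num_bits)
    # Include 0.0 in the range so that zero maps to an integer value.
    lo = min(min(samples), 0.0)
    hi = max(max(samples), 0.0)
    if hi == lo:
        return 1.0, 0  # Degenerate case: all samples are zero.
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(
    values: Sequence[float], scale: float, zero_point: int, num_bits: int = 8
) -> List[int]:
    """Map floats to integers, clamping anything outside the calibrated range."""
    qmin, qmax = _int_range(num_bits)
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map integers back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


if __name__ == "__main__":
    calibration_samples = [0.0, 0.5, 1.0, 2.55]  # Stand-in for real activations.
    scale, zero_point = calibrate(calibration_samples)
    q = quantize(calibration_samples, scale, zero_point)
    print(q, dequantize(q, scale, zero_point))
```

For values inside the calibrated range, the round-trip error is bounded by about half the scale. That is why the choice of calibration samples matters: a range that is too wide wastes precision, while one that is too narrow clamps real activations.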

An easier, model-agnostic workflow for edge AI software

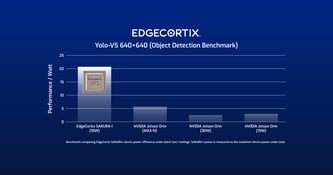

Data scientists and Python-first developers can quickly get applications into edge devices running EdgeCortix DNA IP with MERA. The development process is similar whether the hardware choice is an EdgeCortix SAKURA edge AI co-processor, a system-on-chip designed with EdgeCortix DNA IP, or an EdgeCortix Inference Pack on BittWare FPGA cards.

The latest release, MERA 1.3.0, is available for download from the EdgeCortix GitHub repository, with code files, pre-trained applications in the Model Zoo, and documentation. Using MERA as a companion tool to EdgeCortix-enabled hardware, teams can worry less about edge AI hardware complexity and focus more on edge AI software robustness and performance.

How can MERA help your edge AI project?


Antonio Nevado

DNN Compiler Engineer
